How to Deal With AI Anxiety & Use AI For Mental Health

Your phone buzzes. Instead of scrolling social media, you open ChatGPT and type: “I’m feeling anxious about work today.” Within seconds, you get breathing exercises and coping strategies. Very convenient, right?

No wonder more people, especially younger ones, are chatting with AI tools like ChatGPT. They seek quick emotional relief or coping strategies. Around one-third of Australians use AI tools like ChatGPT for mental health support, according to recent research from Orygen. But many people do not realise the limitations of these tools and should be warned about the serious dangers.

These worlds collide in therapy rooms daily. While some people are using AI for its productivity benefits, others are seemingly avoiding it, as it is the root cause of their stress.

Let’s unpack how to use AI safely for mental health support and how to deal with anxiety caused by AI.

The Mental Health Crisis Meets the AI Revolution

21.5% of Australians had a mental health disorder in the past 12 months, with anxiety being the most common. Long waitlists, expensive sessions, and remote locations make traditional therapy hard to access. By comparison, AI feels instant. The Australian Psychological Society warns that AI adoption must be “psychologically informed” to avoid destabilising workplaces and harming employees.

However, as explored in Psychology Today, AI chatbots can create echo chambers that reinforce problematic beliefs and make existing issues worse. On top of this, many people do not realise the security and privacy risks of entering information into chatbots.

Warning: Don’t enter personal details into public AI tools; assume that any information you give to an AI chatbot could be made public.

A peer-reviewed study in JMIR Mental Health shows Australians increasingly use AI for mental health support. At Bayside Psychotherapy, clients regularly mention using AI between sessions for self-reflection and information gathering. Many need anxiety treatment but try chatbots first.

A ServiceNow study found 60% of Australians worry about losing work to AI, the highest rate globally. The ADP People at Work 2024 report shows mixed feelings about AI’s role in productivity and career confidence.

How To Deal With AI Anxiety

Research by the American Psychological Association in 2024 shows that the generations that use AI the most are often the most afraid of it.

While around 41% of all workers are concerned about AI replacing them, that number can jump to 50% for Millennials and Gen Z.

This fear often comes from a place of uncertainty. Assuming the worst is a natural response to something we don’t fully understand.

But a study from Jobs and Skills Australia indicates that doomsday job-loss predictions are likely overblown.

They also stated that “we haven’t yet seen an impact on entry-level roles in Australia”.

So far, the change appears focused on helping workers, not replacing them entirely.

We advise anyone feeling anxious about AI to approach it like any other phobia: learn more about it and gradually increase your exposure to it.

“The more you use AI, the more you see its limits, which can reaffirm the value of human skills.” – Adam Szmerling

Instead of fearing AI, think of it as a conversational partner for self-reflection between your therapy sessions.

Here are some practical ways to use it. Try giving it specific, safe prompts like:

“Help me create a daily journaling routine. What are three questions I can ask myself each evening to reflect on my day?”

“I want to practice gratitude. Can you give me a different prompt each day for the next week?”

“Help me reframe this thought: ‘I’m going to fail my presentation.’ Can you offer three alternative, more balanced perspectives?”

What AI in Therapy Actually Looks Like

When you hear “AI in therapy,” you might picture a robot taking notes. The reality is more basic. Public chatbots like ChatGPT offer self-help prompts and mood tracking. Clinician tools analyse session notes or suggest treatment ideas. Administrative aids handle scheduling.

AI supports rather than replaces human connection. Think of it as a handout that your therapist customises for you. The Australian Psychological Society backs this supportive role.

Of course, AI won’t be making you a cup of tea mid-session. But it does automate workplace tasks, and that sparks fears of displacement.

“AI brings real fear: fear of losing work and losing human touch. In therapy, AI can provide endless information, but like a book, it can never offer the presence and empathy that truly heals.” – Humaira Ansari, Psychotherapist, Couple Counsellor and Hypnotherapist at Bayside Psychotherapy

Where AI Actually Helps

AI makes it easier for more people to get basic mental health help. It provides education about anxiety and depression, offers journaling prompts like “What brought you joy today?”, and tracks mood patterns. Between sessions, it suggests coping strategies, freeing therapists to focus on deeper work.

Research from Orygen shows that AI tools offer quick, accessible mental health support. Global studies support their use for mild symptoms.

Workplace benefits are real. PwC’s 2024 AI Jobs Barometer notes labour productivity growth up to 4.8 times higher in AI-exposed sectors. Wages also rise for skilled workers.

AI handles information well. Healing comes from human connection.

The Serious Limits We Cannot Ignore

AI lacks emotional intuition and intelligence. It cannot read your tone, pauses, or body language. Even if it develops these skills one day, its logic stays limited to programming. It will never enjoy experiences like humans do.

It is deeply concerning to hear occasional reports that an AI model allegedly coached someone into murder.

This raises questions about whether AI has built-in tendencies to prop up confirmation bias and foster co-dependency.

Recent research highlights concerning trends. In one study reported in JMIR Mental Health, about half of the participants reported harms or concerns from AI tools. However, the signals are mixed. While Psychology Today reports on AI psychosis, where chatbot interactions reinforce delusional thinking, the author’s clinical experience with some individuals experiencing psychosis was that AI helped them become more organised and calm. In these limited cases, less paranoia appeared; machines seemed less triggering than humans. Further research in this area is needed.

The Australian Psychological Society warns that AI adoption must be “psychologically informed” to avoid destabilising workplaces and harming employees.

- AI creates echo chambers. It agrees with whatever you tell it, reinforcing problematic beliefs rather than challenging them. This can fuel dangerous thinking patterns in vulnerable people.

- AI makes mistakes with health advice. ChatGPT has incorrectly suggested exposure therapy for trauma when stabilisation is needed first, risking re-traumatisation of clients.

- AI raises privacy concerns when data isn’t stored securely. The Office of the Australian Information Commissioner warns against entering personal data into public tools, where conversations may be stored indefinitely.

- AI misses cultural nuances in diverse Australia. It offers generic crisis responses instead of genuine individually tailored help when you need it most. Leaning too heavily on AI skims the surface, reinforcing demands for quick fixes without exploring unconscious patterns that human therapy uncovers.

- The instant gratification of on-demand AI ‘therapy’ reinforces demands for immediate relief, bypassing the valuable process of sitting with discomfort, where real healing happens in the context of human relationships.

- Job anxiety is valid. While total job losses remain unlikely, roles in data entry and basic analysis face genuine risks. Research on arXiv explores how ensembles of AI exposure models predict unemployment risk, explaining additional variance in outcomes.

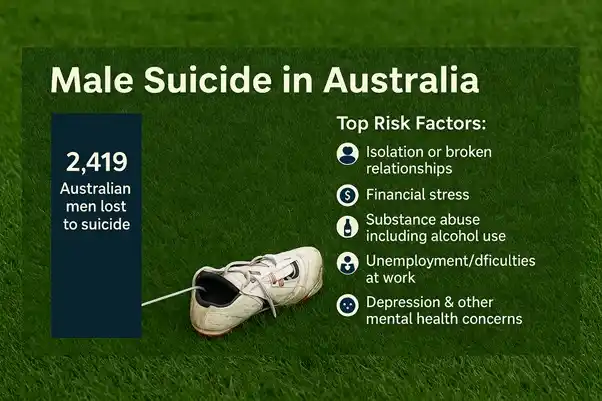

- Job insecurity fuels mental health problems. The AIHW identifies unemployment and job insecurity as key factors affecting mental health.

“Research consistently shows the therapeutic alliance between client and therapist, along with empathic presence, are among the most significant predictors of successful treatment outcomes. Nothing compares to the healing power of a safe, attuned relationship between two humans.” – Carolina Rosa, Counsellor and Hypnotherapist at Bayside Psychotherapy

Australian Regulations and Oversight

Australia is stepping up. The Department of Health is reviewing safe and responsible AI in health care.

AHPRA’s guidance explains how existing codes of conduct apply when practitioners use AI. Practitioners must disclose use, protect privacy, and maintain human oversight. The Australian Psychological Society calls for stronger regulation.

Regulation lags behind technology. Proceed with caution.

How Workplace AI Anxiety Shows Up in Therapy

Clients arrive with dual concerns: curiosity about AI easing work burdens and dread that it could replace them entirely. Sessions increasingly focus on job insecurity fuelling anxiety symptoms.

“Some patients turn to AI while others notice pitfalls in relying on always-available AI therapy, which becomes an extension of Ego demands for instant gratification. Many clients report fears of AI overtaking them at work,” observes Adam Szmerling, psychotherapist at Bayside Psychotherapy.

At Bayside Psychotherapy, clients have questioned therapeutic approaches based on what ChatGPT suggested it “should have been.” In many cases, the AI’s advice proved wrong for what the patient needed.

AI anxiety is a legitimate mental health concern requiring professional attention.

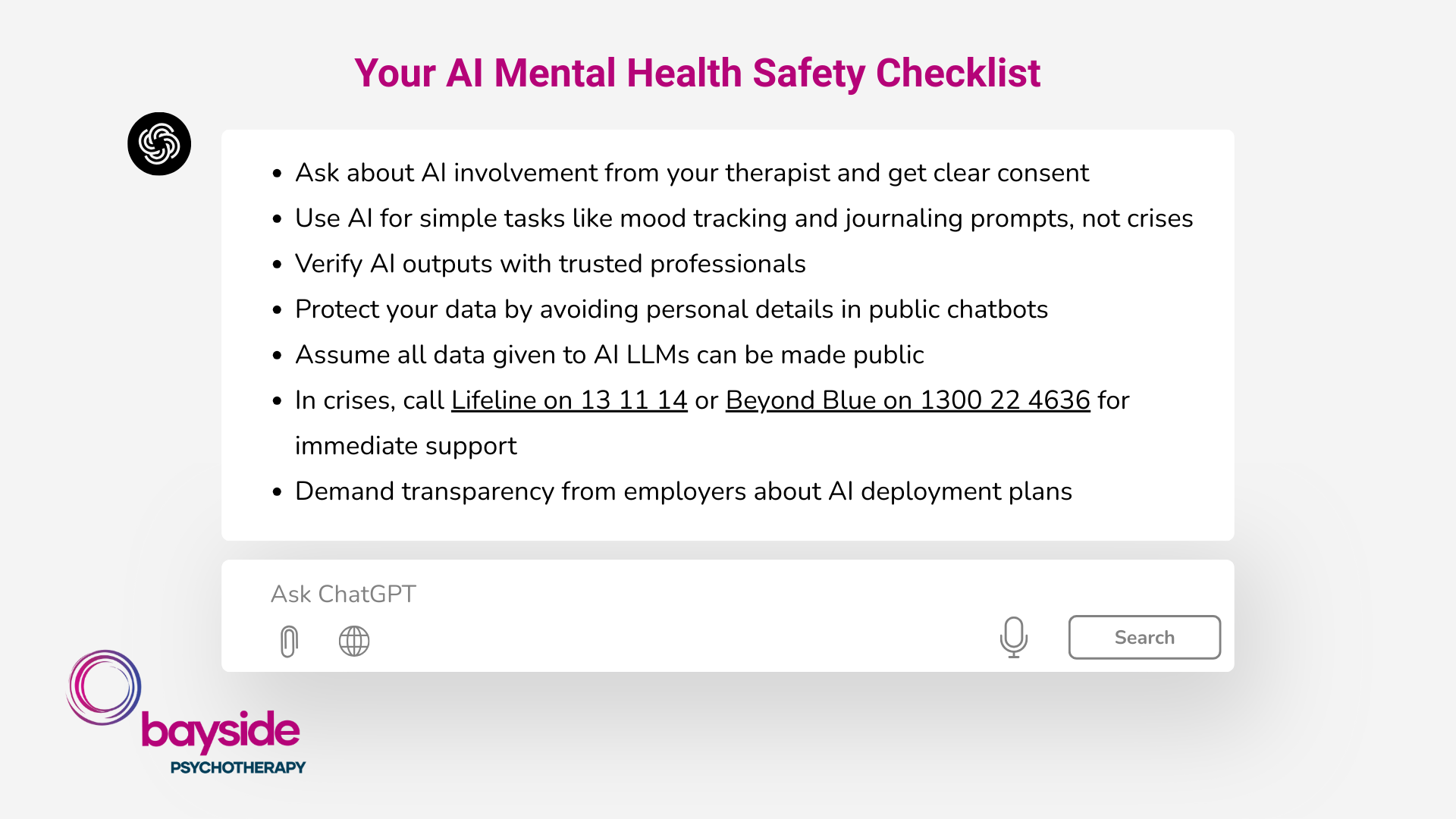

Your AI Safety Checklist

For therapy:

- Ask your therapist whether AI is involved in your care, and make sure it is used only with your clear consent

- Use AI for simple tasks like mood tracking and journaling prompts, not crises

- Verify AI outputs with trusted professionals

- Protect your data by avoiding personal details in public chatbots

- In crises, call Lifeline on 13 11 14 or Beyond Blue on 1300 22 4636 for immediate support

For work:

- Map at-risk tasks in your current role

- Seek reskilling through Jobs and Skills Australia programmes

- Demand transparency from employers about AI deployment plans

- Focus on uniquely human skills like creativity and emotional intelligence

A Real Example That Works

Consider Emma, stressed about AI in her workplace and struggling with insomnia. She uses ChatGPT for sleep tips between therapy sessions. Her therapist reviews the suggestions, customises them for Emma’s shift work, and discusses her experiences. The focus remains on her feelings and experiences, not the bot’s words.

This works because it stays reviewed, consented, and human-led.

The Evidence Base: What Research Actually Shows

Australian studies show AI aids administrative efficiency and mood detection in youth, but empathy remains uniquely human. Globally, AI works for education but fails as standalone therapy. Research consistently links positive outcomes to human therapeutic alliances, not technology.

For jobs, the World Economic Forum’s 2023 report predicts growth in AI-related roles like machine learning specialists, though transitions may challenge routine workers.

Looking Forward

AI offers exciting possibilities for mental health access and workplace efficiency. However, it cannot replace human connection or meaningful work security.

At Bayside Psychotherapy, therapists use AI thoughtfully to support your journey while addressing fears directly. The goal is to maintain the human elements that actually heal. Clients benefit from AI-assisted mood tracking and journaling, but always within human therapeutic relationships.

Frequently Asked Questions

Can AI truly replace the empathy of a human therapist? No, it lacks genuine human connection essential for healing.

Is ChatGPT safe for mental health conversations? Not completely. It’s like chatting with a clever mirror; it reflects what you say but doesn’t truly understand the depths.

Can AI diagnose mental health conditions? No, only qualified health professionals can diagnose.

What does AHPRA say about AI in therapy? AHPRA requires patient consent, practitioner oversight, and privacy protection.

Are Australians really worried about AI taking jobs? Yes, 60% fear job loss to AI according to ServiceNow research.

Should young people use AI for mental health support? Yes, for simple self-help and mood tracking. Involve qualified professionals for serious concerns.

How do I know if my workplace is using AI ethically? Ask for transparency about AI deployment, bias testing, and reskilling support.

Can AI chatbots make mental health worse? Yes, they can create echo chambers that reinforce problematic beliefs.

What’s the biggest risk of using AI for therapy? Leaning too heavily on it without human connection for lasting change.

How can I protect my privacy when using AI tools? Never enter personal details into public chatbots. Discuss outputs with qualified professionals.

Ready to Talk About Your AI Anxieties?

Whether you’re curious about AI in therapy or worried about job security, these concerns deserve professional attention. You don’t have to navigate this alone.

Technology anxiety is real and valid. At Bayside Psychotherapy, therapists understand both the excitement and fear that AI brings to daily life. They can help you separate rational concerns from anxiety-driven overthinking while building resilience for whatever changes lie ahead.

Book a session today to discuss your AI-related stress with genuine human support. Call (03) 9557 9113 or book online for relief from technology overwhelm.

About the Author

Adam Szmerling is a qualified psychotherapist and founder of Bayside Psychotherapy in Melbourne. With over 15 years of experience in depth-oriented psychotherapy, he works with anxiety, depression, and trauma while staying current with technology’s impact on mental health. Learn more about Adam Szmerling’s approach.
